Upgrading technology is always a big decision, whether you’re an individual professional, a small business, or a large enterprise. The NVIDIA H200 GPU has been making waves in the computing world as the successor to the already powerful H100, promising better performance, efficiency, and scalability.

But should you actually upgrade to the H200? In this guide, we’ll break down what the H200 brings to the table, who it’s best suited for, and whether the investment is worth it for your specific needs.

What Is the H200?

The NVIDIA H200 Tensor Core GPU is part of the Hopper architecture family, designed for AI workloads, high-performance computing (HPC), and data-intensive applications. It builds upon the H100 but introduces significant improvements in memory bandwidth, capacity, and performance efficiency.

Some standout features include:

● HBM3e Memory: Faster and more efficient than the H100’s HBM3, offering up to 4.8 terabytes per second of bandwidth.

● Higher Memory Capacity: With 141 GB of memory per GPU (versus 80 GB on the H100), the H200 can hold much larger models and datasets.

● Better Energy Efficiency: Designed to deliver more work per watt than the H100, making large-scale AI training and inference more sustainable.

● Enhanced Scalability: Designed to fit seamlessly into multi-GPU clusters, essential for enterprise AI workloads.

How Does the H200 Compare to the H100?

While the H100 was revolutionary when it launched, the H200 represents a meaningful step forward in performance and efficiency.

| Feature | H100 (SXM) | H200 |
| --- | --- | --- |
| Memory Type | HBM3 | HBM3e |
| Memory Bandwidth | ~3.35 TB/s | ~4.8 TB/s |
| Memory Capacity | 80 GB | 141 GB |
| Performance Focus | Training & inference | Larger-scale training, higher efficiency |
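To put the bandwidth row in more concrete terms, here is a rough back-of-the-envelope sketch. It assumes single-stream (batch size 1) LLM text generation is memory-bandwidth-bound, so every generated token requires reading all model weights from memory once. The function name `max_tokens_per_second` and the 13-billion-parameter, 16-bit example model are illustrative assumptions, and the estimate ignores the KV cache, compute limits and real-world efficiency losses, so treat the results as ceilings rather than benchmarks. The H100 figure is the SXM variant's peak bandwidth.

```python
# Rough ceiling on single-stream LLM decode speed, assuming generation is
# memory-bandwidth-bound: each new token streams every weight from memory once.
# Assumption: weight traffic dominates; KV cache reads, compute limits, and
# real-world efficiency losses are deliberately ignored.

BANDWIDTH_TB_S = {"H100": 3.35, "H200": 4.8}  # peak figures; H100 value is the SXM variant


def max_tokens_per_second(params_billion: float, bytes_per_param: float,
                          bandwidth_tb_s: float) -> float:
    """Return the bandwidth-bound upper limit on tokens/s at batch size 1."""
    weight_bytes = params_billion * 1e9 * bytes_per_param  # bytes read per token
    return bandwidth_tb_s * 1e12 / weight_bytes            # bytes/s divided by bytes/token


if __name__ == "__main__":
    # Hypothetical example model: 13B parameters stored in 16-bit (2 bytes each).
    for gpu, bw in BANDWIDTH_TB_S.items():
        ceiling = max_tokens_per_second(13, 2, bw)
        print(f"{gpu}: at most ~{ceiling:.0f} tokens/s")
    ratio = BANDWIDTH_TB_S["H200"] / BANDWIDTH_TB_S["H100"]
    print(f"Bandwidth ratio H200/H100: {ratio:.2f}x")
```

Under these assumptions the ceiling scales directly with bandwidth, so the H200's is roughly 1.43× the H100's; measured gains depend on batch size, the software stack, and whether a workload is actually memory-bound.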

The most critical differences are memory capacity and bandwidth. Modern AI models such as large language models (LLMs) with billions of parameters require enormous amounts of memory: a 70-billion-parameter model stored in 16-bit precision needs about 140 GB for its weights alone, before activations or the KV cache are counted. The H200’s expanded capacity lets users run larger models on fewer GPUs, which reduces the inter-GPU communication that becomes a bottleneck in multi-GPU setups.
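The capacity argument can be checked with simple arithmetic: weight memory is roughly parameter count × bytes per parameter. The sketch below estimates the minimum number of GPUs needed just to hold a model's weights. It assumes a hypothetical 80% of each GPU's memory is available for weights (the rest left for the KV cache, activations and framework overhead); the helper names and the `USABLE_FRACTION` value are illustrative, not vendor guidance.

```python
import math

# Sketch: minimum GPUs needed to hold a model's weights.
# Assumptions (illustrative only): weights-only accounting, and a hypothetical
# USABLE_FRACTION of each GPU's memory reserved for weights.

GPU_MEMORY_GB = {"H100": 80, "H200": 141}
USABLE_FRACTION = 0.8  # hypothetical headroom assumption


def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Weights-only footprint in decimal GB (1e9 params x bytes / 1e9 bytes per GB)."""
    return params_billion * bytes_per_param


def min_gpus(params_billion: float, bytes_per_param: float, gpu: str) -> int:
    """Smallest GPU count whose usable memory can hold the weights."""
    usable_per_gpu = GPU_MEMORY_GB[gpu] * USABLE_FRACTION
    return math.ceil(weight_memory_gb(params_billion, bytes_per_param) / usable_per_gpu)


if __name__ == "__main__":
    # Hypothetical 70B-parameter model in 16-bit (2 bytes) and 8-bit (1 byte) precision.
    for bytes_per_param in (2, 1):
        need = weight_memory_gb(70, bytes_per_param)
        counts = {gpu: min_gpus(70, bytes_per_param, gpu) for gpu in GPU_MEMORY_GB}
        print(f"{bytes_per_param}-byte weights: {need:.0f} GB -> {counts}")
```

For the hypothetical 70B model this prints `{'H100': 3, 'H200': 2}` at 2 bytes per parameter and `{'H100': 2, 'H200': 1}` at 1 byte per parameter. Needing fewer GPUs per model is what cuts the communication overhead described above; real deployments also need room for the KV cache, which grows with batch size and context length.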

Who Should Consider Upgrading?

Not everyone needs to jump to the H200 immediately. The decision depends on your workloads and business priorities.

AI Researchers & Developers

If you’re pushing the boundaries of large-scale AI, the H200 can make a substantial difference. Training massive LLMs, building generative AI models, or running high-resolution simulations all benefit significantly from its increased memory capacity and bandwidth.

Enterprises with Heavy AI Workloads

Companies deploying AI at scale, particularly in industries such as healthcare, finance, and autonomous vehicles, can expect faster time-to-market and lower infrastructure costs thanks to the H200’s efficiency.

High-Performance Computing (HPC) Centres

H200 is well-suited for scientific research, weather forecasting, molecular modelling, and big data analytics, where simulations demand high throughput and large memory capacity.

Small Businesses & Individual Developers

If you’re not working on cutting-edge AI or HPC workloads, H100 or even earlier models may still be more than sufficient. H200 is an expensive investment, and its true potential is only realised in large-scale, compute-intensive environments.

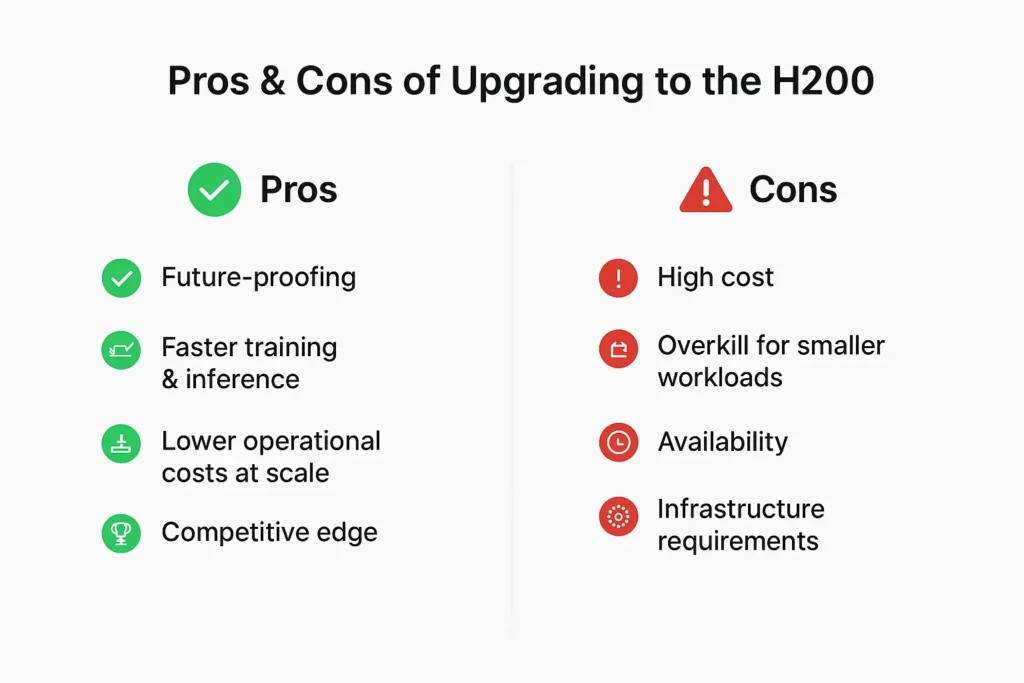

Pros of Upgrading to the H200

● Future-Proofing: As AI models grow larger, H200 provides the headroom needed for the next generation of workloads.

● Faster Training & Inference: Reduced training times mean quicker results and faster product iterations.

● Lower Operational Costs at Scale: Better efficiency translates into savings in data centres where thousands of GPUs run simultaneously.

● Competitive Edge: Organisations adopting H200 can outpace competitors still limited by older GPUs.

Cons of Upgrading to the H200

● High Cost: As with any new flagship hardware, H200 commands a premium price.

● Overkill for Smaller Workloads: Many users won’t max out even the H100’s capabilities, making the upgrade unnecessary.

● Availability: Early adoption often comes with limited supply and potential wait times.

● Infrastructure Requirements: To take full advantage of H200, you may also need updates to supporting systems (networking, storage, cooling).

Practical Advice: Should You Upgrade?

The decision boils down to your workload and budget.

- If you are a research institution, enterprise AI team, or HPC centre dealing with enormous models and datasets, H200 is a worthwhile upgrade that will likely pay off in efficiency and performance gains.

- If you’re a startup or a smaller team, the H100 remains an excellent option. It’s more affordable, still highly capable, and well suited to most current AI workloads.

- If your work involves general GPU computing, gaming, or light ML, the H200 is unnecessary; consumer GPUs or older data-centre GPUs will likely meet your needs.

Final Thoughts

The H200 is undeniably powerful and pushes the boundaries of what’s possible in AI and HPC. But whether you should upgrade depends on context. For organisations operating at the cutting edge of AI, it is more than just a performance boost; it’s a competitive advantage. For smaller-scale users, however, the H100 and other GPUs remain cost-effective, high-performing choices.